Let's start by looking at the requirements.

⚙️📌 Functional Requirements

- Tweet - allow users to post text, images, videos, links, etc.

- Retweet - allow users to share other users' tweets

- Follow/unfollow

- Search

⚙️📌 Non-Functional Requirements

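- Read-heavy - users read tweets far more often than they post them

- Fast rendering - timelines should load with low latency

- Fast tweeting - posting a tweet should complete quickly

- Scalability and efficiency - the system should scale horizontally and use resources efficiently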

👥🧑‍🤝‍🧑 Types of Users

- Famous Users - celebrities, sportspeople, politicians, or business leaders

- Active Users

- Live Users

- Passive Users

- Inactive Users

🔍👀 Capacity Estimation for Twitter System Design

Let us assume we have 1 billion total users with 200 million daily active users (DAU), and that on average each user tweets 5 times a day.

200M * 5 tweets = 1B/day

Tweets can also contain media such as images or videos. We can assume that 10 percent of tweets contain media files shared by users.

10% * 1B = 100M/day

1 billion tweets per day translates into roughly 12k write requests per second.

1B / (24 hrs * 3600 seconds) ≈ 11.6k ≈ 12k requests/second

If each tweet averages 100 bytes of text, we will require about 100 GB of database storage every day (excluding media files).

1 billion * 100 bytes = 100 GB/day
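The arithmetic above can be reproduced with a few lines of Python. The function and parameter names are our own; the defaults are simply the assumptions stated in this section, so it is easy to see how the numbers move if, say, DAU doubles.

```python
# Back-of-the-envelope capacity estimate using the assumptions stated above.
SECONDS_PER_DAY = 24 * 3600


def estimate_capacity(dau=200_000_000, tweets_per_user_per_day=5,
                      media_percent=10, bytes_per_tweet=100):
    """Return daily volume, average write QPS, and daily text storage."""
    tweets_per_day = dau * tweets_per_user_per_day
    # Integer arithmetic avoids floating-point surprises in the percentage.
    media_tweets_per_day = tweets_per_day * media_percent // 100
    avg_write_qps = tweets_per_day / SECONDS_PER_DAY
    # Text only: media bytes go to blob storage and are not counted here.
    text_storage_gb_per_day = tweets_per_day * bytes_per_tweet / 10**9
    return {
        "tweets_per_day": tweets_per_day,
        "media_tweets_per_day": media_tweets_per_day,
        "avg_write_qps": avg_write_qps,
        "text_storage_gb_per_day": text_storage_gb_per_day,
    }


if __name__ == "__main__":
    for name, value in estimate_capacity().items():
        print(f"{name}: {value:,.0f}")
```

Keep in mind that ~12k/s is an average write rate; peak traffic and the much larger read volume would need their own estimates.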

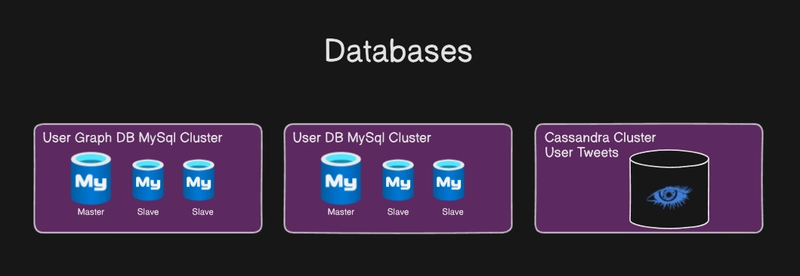

🗄️💾 Data Storage

- User accounts - Store user data like username, email, password hash, profile picture, bio, etc. in a relational database like PostgreSQL.

- Tweets - Store tweets in a separate table within the same database, including tweet content, author ID, timestamp, hashtags, mentions, retweets, replies, etc.

- Follow relationships - Use a separate table to map followers to the users they follow (followees), allowing efficient retrieval of user feeds.

- Additional data - Store media assets like images or videos in a dedicated storage system like S3 and reference them in the tweet table.
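Here is a minimal sketch of the tables described above, using Python's built-in `sqlite3` module as a stand-in for PostgreSQL so it runs anywhere. The column names, indexes, and the `home_feed` helper are illustrative assumptions, and hashtags and mentions are left out for brevity (they could live in their own tables).

```python
import sqlite3

# Illustrative schema; SQLite stands in for PostgreSQL here.
SCHEMA = """
CREATE TABLE users (
    id            INTEGER PRIMARY KEY,
    username      TEXT NOT NULL UNIQUE,
    email         TEXT NOT NULL UNIQUE,
    password_hash TEXT NOT NULL,      -- never store plaintext passwords
    profile_pic   TEXT,               -- object-storage key, not image bytes
    bio           TEXT
);
CREATE TABLE tweets (
    id         INTEGER PRIMARY KEY,
    author_id  INTEGER NOT NULL REFERENCES users(id),
    content    TEXT NOT NULL,
    media_key  TEXT,                  -- reference to a blob in S3-style storage
    reply_to   INTEGER REFERENCES tweets(id),
    retweet_of INTEGER REFERENCES tweets(id),
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
CREATE TABLE follows (
    follower_id INTEGER NOT NULL REFERENCES users(id),
    followee_id INTEGER NOT NULL REFERENCES users(id),
    PRIMARY KEY (follower_id, followee_id)
);
-- Supports "latest tweets by author" and the feed join below.
CREATE INDEX idx_tweets_author_time ON tweets(author_id, created_at);
-- Supports "who follows X?" lookups.
CREATE INDEX idx_follows_followee ON follows(followee_id);
"""

FEED_SQL = """
SELECT t.id, t.author_id, t.content
FROM tweets AS t
JOIN follows AS f ON f.followee_id = t.author_id
WHERE f.follower_id = ?
ORDER BY t.created_at DESC, t.id DESC
LIMIT ?
"""


def home_feed(conn, user_id, limit=20):
    """Pull-style feed: newest tweets from accounts the user follows."""
    return conn.execute(FEED_SQL, (user_id, limit)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany(
    "INSERT INTO users (id, username, email, password_hash) VALUES (?, ?, ?, ?)",
    [(1, "alice", "a@example.com", "x"), (2, "bob", "b@example.com", "x"),
     (3, "carol", "c@example.com", "x")],
)
conn.executemany("INSERT INTO follows VALUES (?, ?)", [(1, 2), (1, 3)])
conn.executemany(
    "INSERT INTO tweets (author_id, content) VALUES (?, ?)",
    [(2, "hello from bob"), (3, "hi from carol"), (1, "alice's own tweet")],
)
print(home_feed(conn, 1))
```

This is the pull (fan-out-on-read) approach: the join runs on every feed request, which is exactly the kind of repeated work a read-heavy system wants to avoid.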

📝 Note: Remember how Twitter is a very read-heavy system? When designing a read-heavy system, we need to precompute and cache as much as we can to keep latency as low as possible.
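One common way to act on this advice is fan-out on write: when a tweet is posted, its ID is pushed into a precomputed home timeline for each follower, so reading a timeline becomes a single cache lookup. The sketch below models that with a hypothetical `TimelineCache` class, an in-memory stand-in for per-user Redis lists; the 800-entry cap is an arbitrary assumption.

```python
import itertools
from collections import defaultdict, deque


class TimelineCache:
    """In-memory stand-in for one capped Redis list per user."""

    def __init__(self, limit=800):
        self.limit = limit
        # deque(maxlen=...) silently drops the oldest entries past the cap.
        self.timelines = defaultdict(lambda: deque(maxlen=self.limit))

    def fan_out(self, tweet_id, follower_ids):
        """Write path: push the new tweet to every follower's timeline."""
        for follower_id in follower_ids:
            self.timelines[follower_id].appendleft(tweet_id)

    def read(self, user_id, count=20):
        """Read path: newest-first slice, no database query needed."""
        timeline = self.timelines.get(user_id)
        if timeline is None:
            return []
        return list(itertools.islice(timeline, count))


cache = TimelineCache()
cache.fan_out(101, ["alice", "dave"])   # a tweet from someone both follow
cache.fan_out(102, ["alice"])           # a tweet only alice sees
print(cache.read("alice"))  # [102, 101]
print(cache.read("dave"))   # [101]
```

The trade-off is write amplification: a tweet from a famous user with millions of followers triggers millions of cache writes. A common refinement is a hybrid approach that skips fan-out for such accounts and merges their recent tweets into the timeline at read time.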

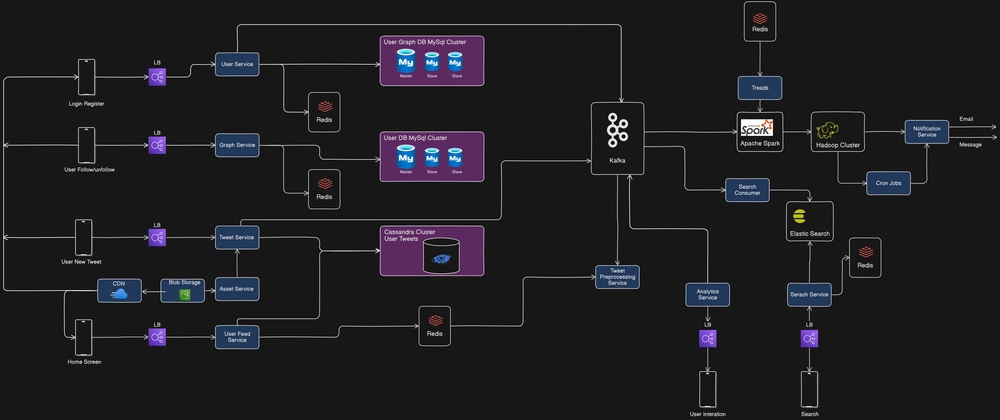

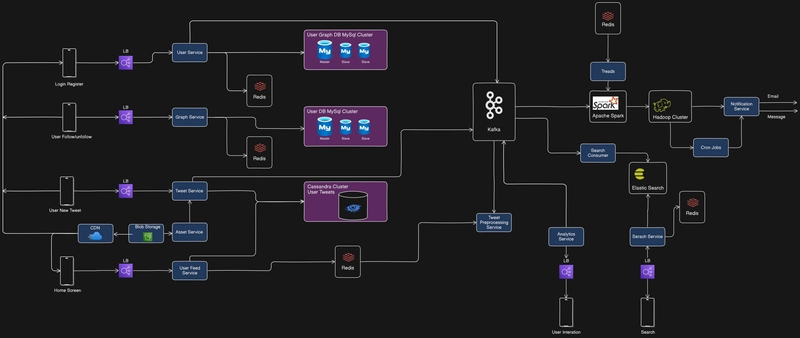

🔥📈 High Level Design


🔹 Register & Login Flow 🔑👤

When a user registers or logs in, the request goes through a Load Balancer (LB) to the User Service. The service interacts with the User DB (MySQL Cluster), which stores authentication details. So that the database is not queried on every request, Redis caches user session tokens and they can be verified quickly. After authentication, the user is issued a session token or JWT for subsequent requests.
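The login flow can be sketched as follows, assuming an opaque session token rather than a JWT. Plain dictionaries stand in for the User DB and the Redis session cache, passwords are stored as salted PBKDF2 hashes from Python's standard library, and the class name, TTL, and iteration count are illustrative assumptions.

```python
import hashlib
import hmac
import secrets
import time

PBKDF2_ITERATIONS = 100_000  # illustrative; tune for your hardware


def _hash_password(password, salt):
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)


class UserService:
    def __init__(self, session_ttl=3600):
        self.users = {}      # stand-in for the User DB: username -> (salt, hash)
        self.sessions = {}   # stand-in for Redis: token -> (username, expires_at)
        self.session_ttl = session_ttl

    def register(self, username, password):
        if username in self.users:
            raise ValueError("username already taken")
        salt = secrets.token_bytes(16)
        self.users[username] = (salt, _hash_password(password, salt))

    def login(self, username, password):
        """Verify credentials against the DB and cache a new session token."""
        record = self.users.get(username)
        if record is None:
            return None
        salt, expected = record
        # Constant-time comparison avoids leaking information through timing.
        if not hmac.compare_digest(_hash_password(password, salt), expected):
            return None
        token = secrets.token_urlsafe(32)
        self.sessions[token] = (username, time.time() + self.session_ttl)
        return token

    def authenticate(self, token):
        """Per-request check: a cache lookup instead of a DB query."""
        entry = self.sessions.get(token)
        if entry is None:
            return None
        username, expires_at = entry
        if time.time() >= expires_at:
            del self.sessions[token]   # Redis would expire the key itself
            return None
        return username


svc = UserService()
svc.register("alice", "correct horse")
token = svc.login("alice", "correct horse")
print(svc.authenticate(token))      # alice
print(svc.login("alice", "wrong"))  # None
```

With a JWT, `authenticate` would instead verify the token's signature and expiry claim, trading easy revocation for not needing a cache lookup at all.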

🔹 User Follow/Unfollow Flow 🔄👥

When a user follows or unfollows someone, the request is sent via the LB to the Graph Service, which manages user relationships. This service updates the User Graph DB (MySQL Cluster) and caches frequently accessed follow data in Redis to reduce database queries. Follow events that affect a user's feed can be published to Kafka and processed asynchronously, which keeps the request path fast as load grows.
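Below is a sketch of the Graph Service's write and read paths under simple assumptions: an in-memory dict of sets stands in for the User Graph DB, a dict of cached follower sets stands in for Redis, and a `queue.Queue` stands in for the Kafka topic. The class and event field names are hypothetical. On every follow or unfollow the service updates the store, invalidates the affected cache entry, and publishes an event for downstream feed processing.

```python
import queue
from collections import defaultdict


class GraphService:
    def __init__(self, events):
        self.following = defaultdict(set)  # stand-in for User Graph DB rows
        self.followers_cache = {}          # stand-in for Redis: user -> followers
        self.events = events               # stand-in for a Kafka topic

    def follow(self, follower, followee):
        if follower == followee:
            raise ValueError("users cannot follow themselves")
        if followee in self.following[follower]:
            return  # idempotent: no duplicate rows or events
        self.following[follower].add(followee)
        self._changed("follow", follower, followee)

    def unfollow(self, follower, followee):
        if followee not in self.following[follower]:
            return
        self.following[follower].discard(followee)
        self._changed("unfollow", follower, followee)

    def followers(self, user):
        """Cache-aside read: serve from cache, else 'query the DB' and fill."""
        cached = self.followers_cache.get(user)
        if cached is None:
            cached = frozenset(f for f, fs in self.following.items() if user in fs)
            self.followers_cache[user] = cached
        return cached

    def _changed(self, kind, follower, followee):
        # Invalidate rather than patch the cached set, then emit the event
        # so feed builders can add or remove the followee's tweets.
        self.followers_cache.pop(followee, None)
        self.events.put({"type": kind, "follower": follower, "followee": followee})


events = queue.Queue()
graph = GraphService(events)
graph.follow("alice", "bob")
graph.follow("carol", "bob")
print(sorted(graph.followers("bob")))  # ['alice', 'carol']
graph.unfollow("alice", "bob")
print(sorted(graph.followers("bob")))  # ['carol']
```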

🔹 Tweet Flow 📝📢

When a user posts a new tweet, the request is handled by the Tweet Service, which stores the tweet in the Cassandra Cluster (User Tweets DB) for scalability. If the tweet contains media, it is stored in Blob Storage and served via the CDN. The tweet event is also pushed to Kafka, where different consumers (such as Tweet Preprocessing Service and Analytics Service) process it for engagement tracking and recommendations.
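The tweet write path might look like this in a simplified, single-process form. Dicts stand in for the Cassandra User Tweets DB and Blob Storage, a tiny synchronous `EventBus` stands in for Kafka (each subscriber plays the role of a separate consumer group, so every consumer sees every event), and `CDN_BASE_URL` is a placeholder. In a real deployment publishing would be asynchronous, so a slow consumer would not delay the user's request.

```python
import itertools
import time
import uuid
from dataclasses import dataclass
from typing import Optional

CDN_BASE_URL = "https://cdn.example.com/media/"  # placeholder domain


@dataclass
class Tweet:
    tweet_id: int
    author: str
    text: str
    media_url: Optional[str]
    created_at: float


class EventBus:
    """Synchronous stand-in for a Kafka topic with several consumer groups."""

    def __init__(self):
        self.consumers = []  # callables that take one event dict

    def subscribe(self, consumer):
        self.consumers.append(consumer)

    def publish(self, event):
        for consumer in self.consumers:
            consumer(event)


class TweetService:
    def __init__(self, bus):
        self.tweets = {}   # stand-in for the Cassandra User Tweets DB
        self.blobs = {}    # stand-in for Blob Storage
        self.bus = bus
        self._ids = itertools.count(1)  # real systems typically use distributed IDs

    def post(self, author, text, media: Optional[bytes] = None):
        media_url = None
        if media is not None:
            key = uuid.uuid4().hex
            self.blobs[key] = media          # upload bytes to blob storage
            media_url = CDN_BASE_URL + key   # clients fetch media via the CDN
        tweet = Tweet(next(self._ids), author, text, media_url, time.time())
        self.tweets[tweet.tweet_id] = tweet
        # Only a small event goes on the topic, not the media bytes.
        self.bus.publish({"type": "tweet_created", "tweet_id": tweet.tweet_id,
                          "author": author, "text": text})
        return tweet


seen = []
bus = EventBus()
bus.subscribe(lambda e: seen.append(("preprocessing", e["tweet_id"])))
bus.subscribe(lambda e: seen.append(("analytics", e["tweet_id"])))
service = TweetService(bus)
service.post("alice", "hello world")
service.post("bob", "look at this", media=b"\x89PNG...")
print(seen)
```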

🔹 Search & Analytics Flow 🔍📊

The Search Service queries Elasticsearch, which is updated via the Search Consumer that listens to Kafka events. To improve performance, Redis caches frequently searched terms and trending topics. The Analytics Service tracks user interactions and forwards insights to Apache Spark, which processes large-scale data and stores it in a Hadoop Cluster. Processed insights are then used to enhance recommendations and notifications.
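To make the search path concrete, here is a toy version in which a dict-of-sets inverted index stands in for Elasticsearch, a dict with per-entry expiry stands in for the Redis query cache, and hashtag counts from incoming tweet events drive a naive trending list. `on_tweet_event` plays the Search Consumer reading from Kafka; all names, the tokenizer, and the 30-second TTL are assumptions, and the Spark/Hadoop analytics pipeline is not modeled. Cached results can be up to one TTL stale, which is often an acceptable trade for search.

```python
import re
import time
from collections import Counter, defaultdict

TOKEN_RE = re.compile(r"[#@]?\w+")


def tokenize(text):
    """Lower-cased words, keeping # and @ prefixes on hashtags and mentions."""
    return [tok.lower() for tok in TOKEN_RE.findall(text)]


class SearchService:
    def __init__(self, cache_ttl=30.0):
        self.postings = defaultdict(set)   # stand-in for Elasticsearch: term -> ids
        self.cache = {}                    # stand-in for Redis: query -> (expires_at, ids)
        self.cache_ttl = cache_ttl
        self.hashtag_counts = Counter()    # feeds a naive "trending topics" list

    def on_tweet_event(self, event):
        """Search Consumer: index each tweet event read from the topic."""
        for term in tokenize(event["text"]):
            self.postings[term].add(event["tweet_id"])
            if term.startswith("#"):
                self.hashtag_counts[term] += 1

    def search(self, query):
        """AND-match every query term; highest (assumed newest) id first."""
        terms = tokenize(query)
        key = " ".join(terms)
        now = time.monotonic()
        hit = self.cache.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # cache hit: no index query
        if terms:
            matches = set.intersection(*(self.postings.get(t, set()) for t in terms))
            ids = sorted(matches, reverse=True)
        else:
            ids = []
        self.cache[key] = (now + self.cache_ttl, ids)
        return ids

    def trending(self, n=3):
        return [tag for tag, _ in self.hashtag_counts.most_common(n)]


search = SearchService()
search.on_tweet_event({"tweet_id": 1, "text": "Learning #Python today"})
search.on_tweet_event({"tweet_id": 2, "text": "#Python tips and #Kafka"})
search.on_tweet_event({"tweet_id": 3, "text": "Kafka consumers explained #Kafka"})
print(search.search("#python"))  # [2, 1]
print(search.search("kafka"))    # [3]
print(search.trending(2))        # ['#python', '#kafka']
```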

💯 Conclusion

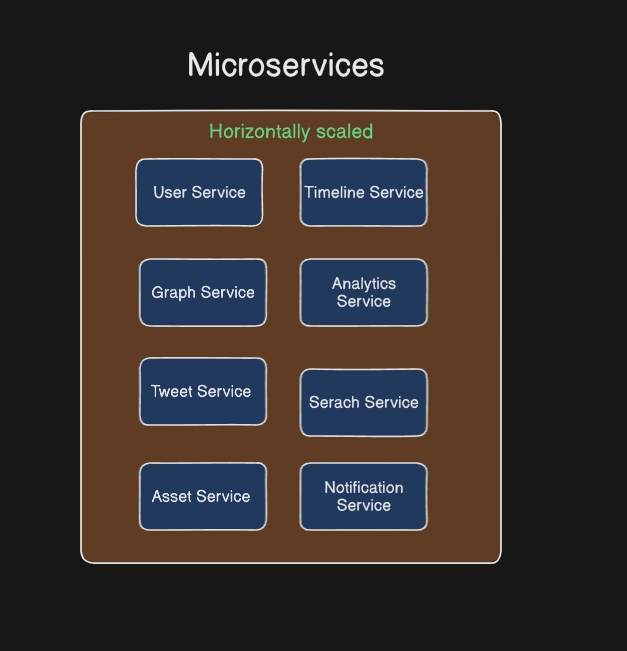

This design scales horizontally, uses event-driven processing through Kafka, and relies on caching (Redis and the CDN) to keep latency low for a read-heavy workload! 🚀